Lifecycle Management of DBpedia Neural QA Models

Hi everyone. This is my final blog post for GSoC’21. In it, I discuss how I implemented lifecycle management for the DBpedia neural QA models and how to use the new features. In short, this post covers the latest features of KBox, airML, and NSpM and how to use them properly.

Over the past three months, I’ve been working on a framework to manage the lifecycle of NSpM models under the supervision of Edgard Marx and Lahiru Oshara. In our first meeting, we created a roadmap specifying how to complete the project goals on time.

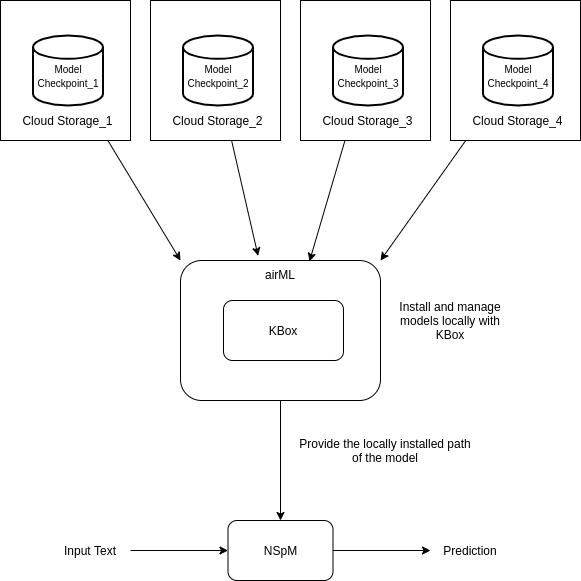

This was the high-level picture of how we planned to implement the model lifecycle framework. As you can see, rather than building a model management framework from scratch, we decided to build on the existing KBox implementation. KBox is a data management framework designed to facilitate data deployment, whether on the cloud, personal computers, or smart devices (edge). We therefore decided to reuse KBox’s features to manage AI models, so KBox acts as the core of the framework. Around KBox sits airML, the model management layer: a Python wrapper of KBox that exposes only the KBox features required to manage a model. NSpM then uses the airML Python package to retrieve and manage models from anywhere. That is how the framework works underneath; now let’s look at the implementation in detail.

KBox JSON Serialization

KBox JSON serialization was my first task. As the high-level diagram above shows, KBox is the core of the framework, so this feature had to come first. As my mentors pointed out, we could not use the existing KBox implementation with airML directly; it needed some modifications. The problem was that KBox did not produce formatted output: every KBox command returned unformatted plain text, and writing logic to extract useful information from that text was complex. We therefore decided to format the KBox output first. KBox can now return the output of a command as a JSON string, which airML can easily convert into a JSON object and extract information from. Consider the following example:

java -jar KBox.jar -list

If we execute the above command with KBox, we get output like this:

KBox KNS Resource table list
##############################
name,format,version
##############################
http://purl.org/pcp-on-web/dbpedia,kibe,c9a618a875c5d46add88de4f00b538962f9359ad
http://purl.org/pcp-on-web/ontology,kibe,c9a618a875c5d46add88de4f00b538962f9359ad
http://purl.org/pcp-on-web/dataset,kibe,dd240892384222f91255b0a94fd772c5d540f38b

With the new feature, we can get the output as follows:

java -jar KBox.jar -list -o json

{
  "success": true,
  "results": [
    {
      "name": "http://purl.org/pcp-on-web/dbpedia",
      "format": "kibe",
      "version": "c9a618a875c5d46add88de4f00b538962f9359ad"
    },
    {
      "name": "http://purl.org/pcp-on-web/ontology",
      "format": "kibe",
      "version": "c9a618a875c5d46add88de4f00b538962f9359ad"
    },
    {
      "name": "http://purl.org/pcp-on-web/dataset",
      "format": "kibe",
      "version": "dd240892384222f91255b0a94fd772c5d540f38b"
    }
  ]
}

To get this output, append the -o json parameter to the end of the command. KBox then knows that the user expects JSON and returns the output in that format. You can read more about this feature here.
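To show why the JSON output helps a wrapper like airML, here is a minimal Python sketch that runs the list command and reads the result. It assumes KBox.jar is in the working directory and that stdout contains only the JSON document with the "success" and "results" keys shown above; the function names are hypothetical and are not part of airML or KBox.

```python
import json
import subprocess
from typing import Dict, List


def parse_kbox_list(raw: str) -> List[Dict[str, str]]:
    """Parse the JSON printed by `java -jar KBox.jar -list -o json`.

    Assumes stdout holds only the {"success": ..., "results": [...]} document.
    """
    payload = json.loads(raw)
    if not payload.get("success", False):
        raise RuntimeError("KBox reported an unsuccessful command")
    return payload.get("results", [])


def run_kbox_list(jar_path: str = "KBox.jar") -> List[Dict[str, str]]:
    """Run the KBox list command with JSON output and parse it (hypothetical helper)."""
    completed = subprocess.run(
        ["java", "-jar", jar_path, "-list", "-o", "json"],
        capture_output=True,  # collect stdout/stderr instead of printing them
        text=True,            # decode bytes to str
        check=True,           # raise CalledProcessError on a non-zero exit code
    )
    return parse_kbox_list(completed.stdout)
```

With KBox available, run_kbox_list() returns one dictionary per listed Knowledge Name, so a caller reads entry["name"] or entry["version"] directly instead of skipping header lines and splitting comma-separated text.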

Related PRs:

https://github.com/AKSW/KBox/pull/30

https://github.com/AKSW/KBox/pull/31

airML implementation

The airML implementation was my second task. airML already had some functions to execute KBox commands from Python, but that implementation had bugs and issues caused by the unformatted KBox output. Since my earlier task had already solved that problem, it was easy to modify airML to fix them. Once airML was successfully integrated with KBox, I started working on a new airML feature: executing KBox commands through airML directly from the terminal. The following example shows how it works.

1. Install the airML Python package:

pip install airML

2. Open a terminal and execute the KBox command as follows:

airML list

Output:

KBox KNS Resource table list
##############################
name,format,version
##############################
http://purl.org/pcp-on-web/dbpedia,kibe,c9a618a875c5d46add88de4f00b538962f9359ad
http://purl.org/pcp-on-web/ontology,kibe,c9a618a875c5d46add88de4f00b538962f9359ad

With the -o json option:

airML list -o json

Output:

{
  "status_code": 200,
  "message": "visited all KNs.",
  "results": [
    {
      "name": "http://purl.org/pcp-on-web/dbpedia",
      "format": "kibe",
      "version": "c9a618a875c5d46add88de4f00b538962f9359ad"
    },
    {
      "name": "http://purl.org/pcp-on-web/ontology",
      "format": "kibe",
      "version": "c9a618a875c5d46add88de4f00b538962f9359ad"
    }
  ]
}
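Because airML wraps the results in an envelope with a status_code and a message, a script can check the status before trusting the results. The sketch below assumes the airML executable is on the PATH after pip install and that it prints only the JSON document shown above; the helper names are hypothetical, not airML functions.

```python
import json
import subprocess
from typing import Dict, List


def parse_airml_output(raw: str) -> List[Dict[str, str]]:
    """Validate the airML JSON envelope and return its results list."""
    payload = json.loads(raw)
    # 200 is the status code shown in the example output above.
    if payload.get("status_code") != 200:
        raise RuntimeError(f"airML error: {payload.get('message', 'unknown error')}")
    return payload.get("results", [])


def airml_list() -> List[Dict[str, str]]:
    """Run `airML list -o json` and return the listed Knowledge Names (hypothetical helper)."""
    completed = subprocess.run(
        ["airML", "list", "-o", "json"],
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError on a non-zero exit code
    )
    return parse_airml_output(completed.stdout)


def names_by_format(entries: List[Dict[str, str]], fmt: str) -> List[str]:
    """Keep only the Knowledge Names stored in the given format, e.g. "kibe"."""
    return [entry["name"] for entry in entries if entry.get("format") == fmt]
```

Checking status_code first keeps error handling in one place, so code that filters or installs models only ever sees a well-formed results list.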

This is one of airML’s handiest features. Apart from that, you can use airML as a normal Python package. To use it in a Python environment, follow these steps:

1. Install the airML Python package:

pip install airML

2. Import the airML package:

from airML import airML

3. Call one of the functions listed here. For example:

airML.list(False)

You can read more about airML’s newest features here.

airML python package:

https://pypi.org/project/airML

Related PR(s):

https://github.com/AKSW/airML/pull/5

NSpM integration with airML

This was the last task on the roadmap. With the KBox and airML work described above, we now have a framework that can manage the lifecycle of any machine learning model. (In fact, airML can manage many types of data: we just add the relevant entry to the table.kns file here, and airML and KBox together handle the rest.)

Previously, to get an output from an NSpM model, we had to download the model checkpoint (or train it locally) and provide the path to the checkpoint as follows:

python interpreter.py --input <path_to_the_model_checkpoint> --output <output_directory> --inputstr "yuncken freeman has architected in how many cities?"

With this implementation, we have to provide the path to the model checkpoint ourselves. If several models are stored locally in different directories, we have to remember and provide each path separately. There may also be several versions of a model checkpoint, so we have to handle versioning as well. Last but not least, some model checkpoints are stored in the cloud; to use them, we have to download them, extract them, and put them in the relevant directories. These were the major issues we had to deal with when using NSpM.

With the help of airML, we were able to solve all of these issues. Recall that the earlier implementation required an argument called --input to provide the model path. With the latest implementation, you can use the --airml argument instead of --input:

python interpreter.py --airml <model_id> --output <output_directory> --inputstr "yuncken freeman has architected in how many cities?"

The --airml argument takes the ID of the model we want to use. A model ID is simply the Knowledge Name in KBox. You can see the details of the available models here. Currently, we provide only one model, trained on the art-30 dataset.

{"name": "http://nspm.org/art", "label":"DBpedia Art Dataset", "target": [[{"zip":[{"url":"https://www.dropbox.com/s/llx7ruy1bxflhvl/art_30.zip?dl=1"}]}]], "format":"nspm", "version": {"name":"1.0", "tags":["latest"]}, "desc": "The pre-trained NSPM DBpedia Art Dataset.", "owner":"NSpM", "publisher":"KBox team", "license":["http://en.wikipedia.org/wiki/Wikipedia:Text_of_the_GNU_Free_Documentation_License", "http://en.wikipedia.org/wiki/Wikipedia:Text_of_Creative_Commons_Attribution-ShareAlike_3.0_Unported_License"],"subsets": ["https://github.com/LiberAI/NSpM/blob/master/data/templates/Annotations_F30_art.csv"]}

Above is the Knowledge Name entry for the provided model. For this model, http://nspm.org/art is the value to pass as the model_id. airML then downloads the relevant model checkpoint and provides the downloaded path so that NSpM can continue the execution. You can read more about this feature here.
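The switch from --input to --airml can be pictured as two small steps: the command line accepts exactly one of the two flags, and a model ID is matched against a table.kns entry like the one above. The sketch below is not the NSpM interpreter.py code. It assumes table.kns holds one JSON object per line and only looks up the checkpoint's download URL; in the real flow, airML downloads and extracts the checkpoint and returns a local path. All function names are hypothetical.

```python
import argparse
import json
from typing import Dict, Iterable, List, Optional


def find_kn_entry(kns_lines: Iterable[str], model_id: str) -> Optional[Dict]:
    """Return the table.kns entry whose "name" equals model_id, or None."""
    for line in kns_lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        entry = json.loads(line)
        if entry.get("name") == model_id:
            return entry
    return None


def zip_urls(entry: Dict) -> List[str]:
    """Collect every zip URL from the nested "target" structure of an entry."""
    urls = []
    for group in entry.get("target", []):    # outer list
        for part in group:                   # inner list of {"zip": [...]} dicts
            for item in part.get("zip", []):
                urls.append(item["url"])
    return urls


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(description="NSpM interpreter arguments (sketch)")
    # Exactly one model source: a local checkpoint path or an airML model ID.
    source = parser.add_mutually_exclusive_group(required=True)
    source.add_argument("--input", help="path to a local model checkpoint")
    source.add_argument("--airml", help="KBox Knowledge Name, e.g. http://nspm.org/art")
    parser.add_argument("--output", required=True, help="output directory")
    parser.add_argument("--inputstr", required=True, help="natural-language question")
    return parser
```

Making the two flags mutually exclusive means argparse itself rejects a command that passes both a local path and a model ID.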

Related PR:

https://github.com/LiberAI/NSpM/pull/56

Additional Implementation

Now let’s look at the additional tasks I worked on during my GSoC period.

- KBox Python package

This is one of the key features I worked on that is not directly part of the model management framework. As mentioned earlier, airML is a Python wrapper of KBox that provides only the KBox functionality needed to manage a model, so other KBox functionality is not exposed through airML. We therefore decided to release a separate Python wrapper that exposes the full feature set of KBox. You can read more about this Python package here.

KBox python package: https://pypi.org/project/KBox

- Blogs

While implementing the framework, I documented the relevant features and wrote a separate blog post about each of them. You can read those posts for in-depth knowledge of those features here.

Future work

- Provide more model checkpoints in table.kns

Currently, we provide only one model checkpoint in the table.kns file. We could add several other model checkpoints trained on other datasets as well.

- Implement functionality to push model checkpoints

With the current airML implementation, adding a model to table.kns is a manual process. First, we upload the model to cloud storage. Then we get a public URL for that file and add it to table.kns. Only then can we use the model with airML. New functionality could automate all these steps, so users could upload their own checkpoints with a single method call.
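As a rough illustration of the last manual step, the sketch below builds a table.kns entry for a checkpoint that has already been uploaded to a public URL, mirroring the fields of the art entry shown earlier. It assumes entries are stored one JSON object per line and omits the publisher and license fields for brevity; the function names, default values, and example model ID are hypothetical, and the upload itself is out of scope.

```python
import json
from typing import Dict, List, Optional


def make_kn_entry(
    name: str,
    label: str,
    zip_url: str,
    version: str = "1.0",
    fmt: str = "nspm",
    desc: str = "",
    owner: str = "NSpM",
    subsets: Optional[List[str]] = None,
) -> Dict:
    """Build a Knowledge Name entry with the same layout as the art entry."""
    return {
        "name": name,
        "label": label,
        "target": [[{"zip": [{"url": zip_url}]}]],
        "format": fmt,
        "version": {"name": version, "tags": ["latest"]},
        "desc": desc,
        "owner": owner,
        "subsets": subsets or [],
    }


def append_to_kns(path: str, entry: Dict) -> None:
    """Append the entry to a table.kns file as a single JSON line."""
    with open(path, "a", encoding="utf-8") as kns:
        kns.write(json.dumps(entry) + "\n")
```

A push command could chain an upload step, make_kn_entry, and append_to_kns, which is roughly the single method call described above.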

That is what I worked on during my Google Summer of Code period. I am very thankful to Mr. Edgard Marx and Mr. Lahiru Oshara for giving me this amazing opportunity. Throughout GSoC, I had the chance to learn new skills, meet new friends, and make valuable open source contributions. Speaking from personal experience, if you are a college student reading this blog, I strongly encourage you to apply for GSoC. It can change your life!